Neuroscience and artificial intelligence can help improve each other

Finding out more about how the brain works could help programmers translate thinking from the wet and squishy world of biology into all-new forms of machine learning in the digital world.

Despite their names, artificial intelligence technologies and their component systems, such as artificial neural networks, don’t have much to do with real brain science. I’m a professor of bioengineering and neurosciences interested in understanding how the brain works as a system – and how we can use that knowledge to design and engineer new machine learning models.

In recent decades, brain researchers have learned a huge amount about the physical connections in the brain and about how the nervous system routes information and processes it. But there is still a vast amount yet to be discovered.

At the same time, advances in computer algorithms, software and hardware have brought machine learning to previously unimagined levels of achievement. I and other researchers in the field, including a number of its leaders, have a growing sense that finding out more about how the brain processes information could help programmers translate the concepts of thinking from the wet and squishy world of biology into all-new forms of machine learning in the digital world.

The brain is not a machine

“Machine learning” is one part of the group of technologies that are often labeled “artificial intelligence.” Machine learning systems are better than humans at finding complex and subtle patterns in very large data sets.

These systems seem to be everywhere – in self-driving cars, facial recognition software, financial fraud detection, robotics, medical diagnosis and elsewhere. But under the hood, they’re all really just variations on a single statistics-based algorithm.

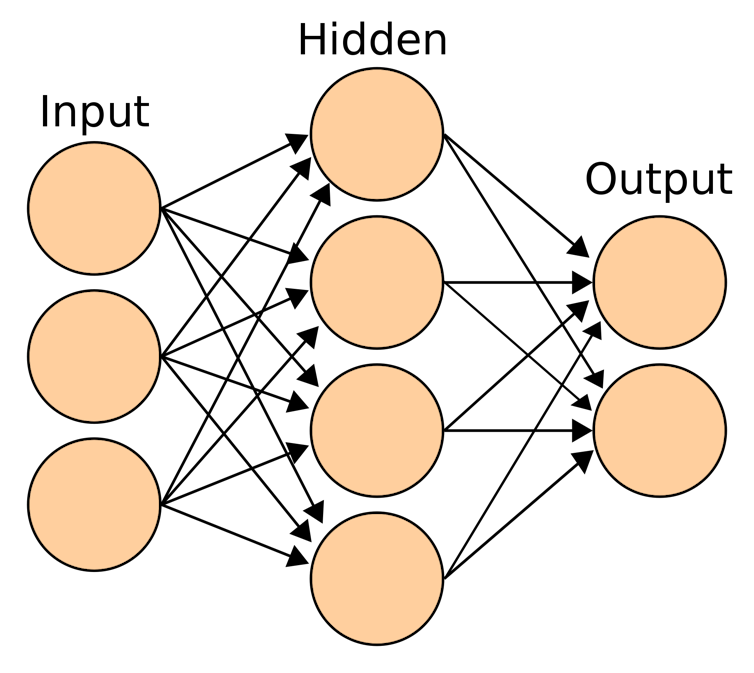

A diagram of a simple artificial neural network.

Cburnett/Wikimedia Commons, CC BY-SA

Artificial neural networks, the most common mainstream approach to machine learning, are highly interconnected networks of digital processors that accept inputs, process measurements about those inputs and generate outputs. They need to learn what outputs should result from various inputs, until they develop the ability to respond to similar patterns in similar ways.

If you want a machine learning system to display the text “This is a cow” when it is shown a photo of a cow, you’ll first have to give it an enormous number of different photos of various types of cows from all different angles so it can adjust its internal connections in order to respond “This is a cow” to each one. If you show this system a photo of a cat, it will know only that it’s not a cow – and won’t be able to say what it actually is.
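As a rough illustration of that training process, here is a minimal Python sketch of a single artificial neuron that adjusts its connection weights until it labels examples correctly. Everything in it is an assumption made for illustration: the function names `train_cow_detector` and `describe`, the two made-up numeric "features" standing in for a photo, and the synthetic data. Real image classifiers use far larger networks and real pixels.

```python
import math
import random

def train_cow_detector(examples, epochs=200, lr=0.5):
    """Fit one artificial neuron (logistic regression) by gradient descent.

    examples: list of (features, label) pairs; label 1 = cow, 0 = not a cow.
    """
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # Weighted sum of the inputs, squashed into a 0..1 "cow" score.
            z = sum(w * x for w, x in zip(weights, features)) + bias
            prediction = 1.0 / (1.0 + math.exp(-z))
            # Nudge each connection in the direction that shrinks the error.
            error = prediction - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias

def describe(weights, bias, features):
    """Report the only two answers this system knows how to give."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return "This is a cow" if z > 0 else "This is not a cow"

# Hypothetical training data: two invented measurements per "photo".
random.seed(0)
cows = [([random.gauss(1.0, 0.2), random.gauss(1.0, 0.2)], 1) for _ in range(50)]
others = [([random.gauss(-1.0, 0.2), random.gauss(-1.0, 0.2)], 0) for _ in range(50)]

weights, bias = train_cow_detector(cows + others)
print(describe(weights, bias, [0.9, 1.1]))    # resembles the cow examples
print(describe(weights, bias, [-1.0, -0.8]))  # resembles the other examples
```

Note that the sketch can only ever answer "cow" or "not a cow." It has no way to say what a non-cow actually is.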

But that’s not how the brain learns, nor how it handles information to make sense of the world. Rather, the brain takes in a very small amount of input data – like a photograph of a cow and a drawing of a cow. Very quickly, and after only a very small number of examples, even a toddler will grasp the idea of what a cow looks like and be able to identify one in new images, from different angles and in different colors.

But a machine isn’t a brain, either

Because the brain and machine learning systems use fundamentally different algorithms, each excels in areas where the other fails miserably. For instance, the brain can process information efficiently even when there is noise and uncertainty in the input – or under unpredictably changing conditions.

You could look at a grainy photo on ripped and crumpled paper, depicting a type of cow you had never seen before, and still think “that’s a cow.” Similarly, you routinely look at partial information about a situation and make predictions and decisions based on what you know, despite all that you don’t.

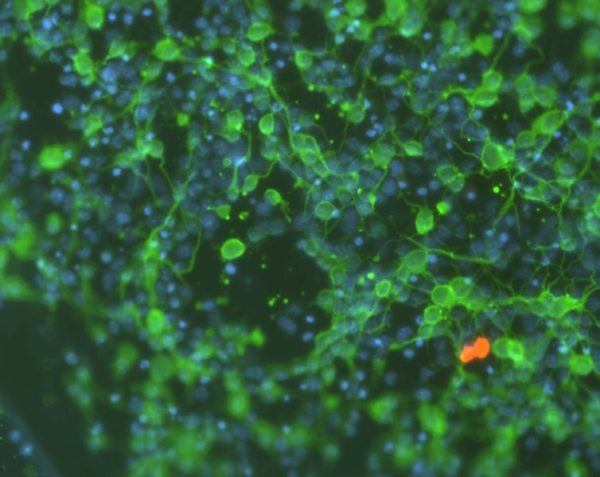

Neuroscientists are still learning how things work inside even this small ‘mini-brain’ cluster of neurons and related cells.

Hoffman-Kim lab, Brown University/National Science Foundation

Equally important is the brain’s ability to recover from physical problems, reconfiguring its connections to adapt after an injury or a stroke. The brain is so impressive that patients with severe medical conditions can have as much as half of their brain removed and recover normal cognitive and physical function. Now imagine how well a computer would work with half its circuits removed.

Equally impressive is the brain’s capability to make inferences and extrapolations, the keys to creativity and imagination. Consider the idea of a cow flipping burgers on Jupiter who at the same time is solving quantum gravity problems in its head. Neither of us has any experience of anything like that, but I can come up with it and efficiently communicate it to you, thanks to our brains.

Perhaps most astonishingly, though, the brain does all this with roughly the same amount of power it takes to run a dim lightbulb.

Combining neuroscience and machine learning

Beyond the open question of how the brain works, it’s not at all clear which brain processes might work well as machine learning algorithms, or how to make that translation. One way to sort through all the possibilities is to focus on ideas that advance two research efforts at once, both improving machine learning and identifying new areas of neuroscience. Lessons can go both ways, from brain science to artificial intelligence – and back, with AI research highlighting new questions for biological neuroscientists.

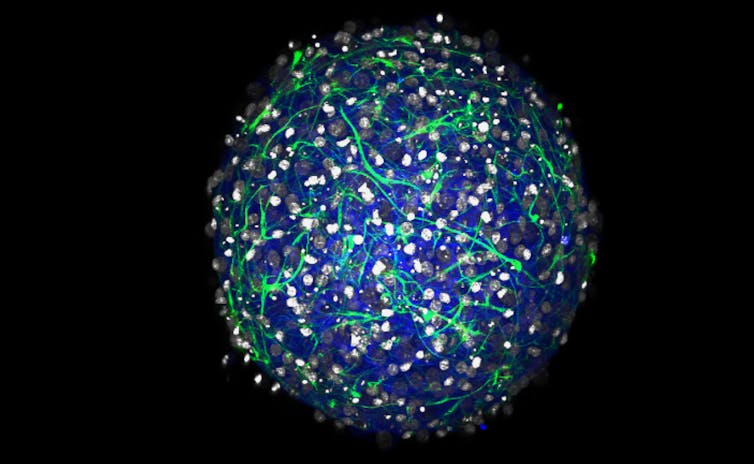

Neurons can grow in very complex shapes.

Juan Gaertner/Shutterstock.com

For example, in my own lab, we have developed a way to think about how individual neurons contribute to their overall network. Each neuron exchanges information only with the other specific neurons it is connected to. It has no overall concept of what the rest of the neurons are up to, or what signals they are sending or receiving. This is true for every neuron, no matter how broad the network, so local interactions collectively influence the activity of the whole.
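The general idea of purely local interactions can be sketched in a few lines of Python. This is a toy illustration only, not the lab's actual model: the five-neuron wiring, the `step` and `run` helpers and the firing threshold are all hypothetical. Each neuron decides whether to fire by looking only at the neurons wired directly into it, yet a signal still travels across the whole network.

```python
def step(active, connections, threshold=1):
    """One update in which every neuron checks only its direct inputs."""
    next_active = set()
    for neuron, inputs in connections.items():
        # Count how many of this neuron's own inputs are currently firing.
        firing_inputs = sum(1 for source in inputs if source in active)
        if firing_inputs >= threshold:
            next_active.add(neuron)
    return next_active

def run(initial, connections, steps):
    """Record which neurons are firing at each time step."""
    history = [set(initial)]
    for _ in range(steps):
        history.append(step(history[-1], connections))
    return history

# Hypothetical wiring: connections[n] lists the neurons that send signals to n.
connections = {
    "a": [],
    "b": ["a"],
    "c": ["a"],
    "d": ["b", "c"],
    "e": ["d"],
}

history = run({"a"}, connections, steps=3)
for t, firing in enumerate(history):
    print(t, sorted(firing))
```

No neuron in this sketch ever sees the full wiring diagram, but activity that starts at `a` still reaches `e` three steps later.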

It turns out that the mathematics that describes these layers of interaction is equally applicable to artificial neural networks and biological neural networks in real brains. As a result, we are developing a fundamentally new form of machine learning that can learn on the fly, without advance training, and that seems to be highly adaptable and efficient at learning.

In addition, we have used those ideas and mathematics to explore why the shapes of biological neurons are so twisted and convoluted. We’ve found that they may develop those shapes to maximize their efficiency at passing messages, following the same computational rules we are using to build our artificial learning system. This was not a chance discovery we made about the neurobiology: We went looking for this relationship because the math told us to.

Taking a similar approach may also inform research into what happens when the brain falls prey to neurological and neurodevelopmental disorders. Focusing on the principles and mathematics that AI and neuroscience share can help advance research in both fields, achieving new levels of ability for computers and understanding of natural brains.

Gabriel A. Silva, Professor of Bioengineering and Neurosciences; Founding Director, Center for Engineered Natural Intelligence, University of California San Diego

This article was originally published in The Conversation. Read the original.